Stacey Wales stood visibly emotional at the lectern, her voice cracking as she implored the judge to impose the harshest sentence possible on the man found guilty of killing her brother, Christopher Pelkey. The Phoenix courtroom was then taken aback when an AI-generated video played, showing an animated likeness of her brother offering forgiveness to the shooter.

The presiding judge praised the video and then sentenced the shooter to 10.5 years in prison, the maximum sentence allowed and more than the prosecution had requested. Shortly after the May 1 hearing, the defense filed a notice of appeal.

Defense attorney Jason Lamm, who will not handle the appeal, said the appeals court may examine whether the AI-generated video improperly influenced the judge's decision. The case comes as courts nationwide grapple with how best to incorporate artificial intelligence into legal proceedings. Even before the Pelkey family used AI for a victim impact statement, possibly a first in U.S. legal history, the Arizona Supreme Court had formed a committee to study best practices for AI.

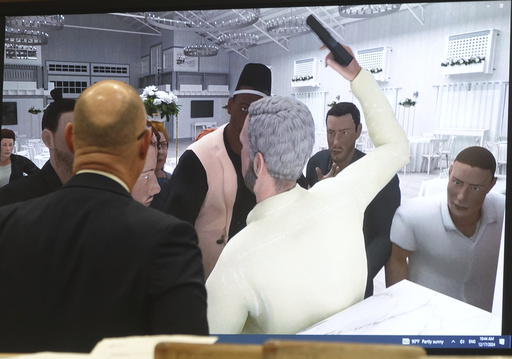

In a separate case in Florida, a judge put on a virtual reality headset meant to show a defendant's perspective in a self-defense case, and ultimately rejected the defendant's self-defense claim. In New York, a litigant used an AI-generated avatar to argue his case in court, but the judges quickly realized that the person on the screen was not real.

Experts say that bringing AI into the courtroom raises substantial legal and ethical challenges, particularly if it can sway judicial outcomes out of proportion to the underlying facts. The technology could also favor litigants with greater resources, deepening existing inequities, especially in cases involving disadvantaged communities.

David Evan Harris, an expert on AI deepfakes at UC Berkeley, emphasized how persuasive AI can be and noted that researchers are still studying how AI intersects with manipulation techniques. Cynthia Godsoe, a Brooklyn Law School professor and former public defender, said the rapidly evolving technology will force courts to confront questions that conventional precedent does not address. Whether AI-generated content accurately reflects a witness's testimony, or whether it alters details such as a suspect's height or skin color, is only the beginning.

“It’s a troubling trend,” Godsoe said, warning that courts may increasingly encounter fabricated evidence that goes undetected.

In the Arizona case, Stacey Wales said she weighed the ethics of writing words for her brother and using his digital likeness to deliver a message in court.

“Ensuring ethical integrity was crucial for us. We didn’t leverage it to make Chris say anything he wouldn’t have,” Wales said. Victims’ rights attorney Jessica Gattuso, who represented the family, confirmed that Arizona permits victim impact statements to be presented in digital formats.

Only Wales and her husband had seen the video before it was shown in court. Their goal was to humanize Chris in the judge’s eyes. Judge Todd Lang praised the AI video, acknowledging its emotional impact and saying it reflected well on the family’s character.

On appeal, the defense is likely to point to Judge Lang’s praise for the AI video as grounds for reconsidering the sentence.