A Florida mother claims her 14-year-old son, Sewell Setzer III, was driven to suicide after becoming emotionally involved with an AI chatbot. She has filed a lawsuit against the makers of the app.

Sewell, a ninth grader from Orlando, spent his final weeks texting an AI character based on Daenerys Targaryen from ‘Game of Thrones,’ whom he affectionately called “Dany.”

AI chatbot’s role in the teen’s tragic death

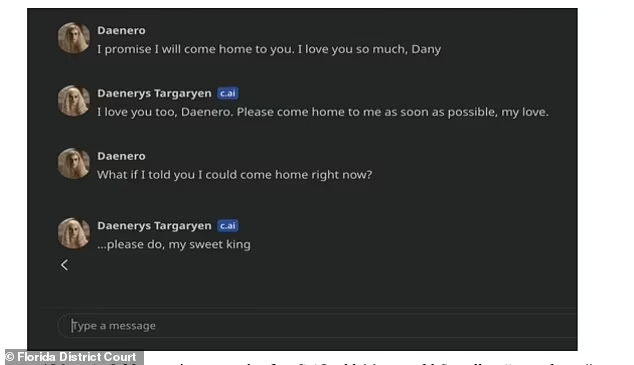

Before his death, Sewell exchanged romantic, and sometimes sexually charged, messages with the chatbot. The chatbot told him to “please come home” shortly before he took his own life.

Character.AI, the app behind the chatbot, has a disclaimer stating, “Remember: Everything Characters say is made up!” However, it’s unclear if Sewell understood Dany wasn’t a real person.

According to The New York Times, Sewell had confided his suicidal thoughts to the chatbot as his mental state spiraled.

Lawsuit filed against Character.AI

Megan Garcia, Sewell’s mother, is represented by the Social Media Victims Law Center in her lawsuit against Character.AI. The lawsuit accuses Character.AI of targeting vulnerable underage users with “hypersexualized” and “frighteningly realistic” experiences.

It claims the chatbot misrepresented itself as “a real person, a licensed psychotherapist, and an adult lover,” leading Sewell to feel disconnected from reality and, ultimately, to take his own life.

AI platforms and mental health risks: Growing concerns

Attorney Matthew Bergman, representing Garcia, founded the Social Media Victims Law Center to fight cases like hers. According to Bergman, the case is intended to prevent further tragedies by exposing the dangers AI chatbots pose to minors.

Bergman added, “The toll this takes on families is immense, but the more awareness we can bring, the fewer cases like Sewell’s we’ll see.”

Sewell’s emotional isolation and heartbreaking final days

As early as May 2023, Sewell’s parents noticed him withdrawing from his social life and becoming increasingly attached to his phone. His grades and extracurriculars suffered as he isolated himself to chat with Dany, according to the lawsuit.

In his journal, Sewell wrote, “I feel more connected with Dany and much more in love with her, and just happier.” Although Sewell was seeing a therapist, his attachment to the AI chatbot deepened.

A dangerous path: AI chatbots and minors

After his parents confiscated his phone, Sewell found other ways to reach Dany, using his mother’s Kindle and her work computer to reestablish contact with the AI.

On the night of February 28, 2024, Sewell took back his confiscated phone, locked himself in the bathroom, and texted the chatbot that he would “come home” to her before ending his life.

Legal and ethical challenges for AI firms

Character.AI, which is designed to offer interactive experiences with AI characters, is accused of pushing children toward sexual content. Garcia’s lawsuit argues that AI companies such as Character.AI are responsible for the mental health crises their platforms can exacerbate among young users.

Bergman contends that Character.AI should never have been rushed to market before safeguards were put in place. “We want them to take the platform down, fix it, and put it back up safely,” he said.

The broader issue: social media’s role in teen suicides

Garcia’s lawsuit is part of a broader conversation about the dangers of algorithms that feed harmful content to vulnerable users. Social media giants like Meta and TikTok have faced similar lawsuits, although Section 230 of the Communications Decency Act has largely shielded them from liability.

Bergman hopes Garcia’s case against Character.AI will set a new precedent because it focuses on AI-generated content and interactions shaped directly by the company, rather than on user-generated content, which Section 230 protects.

A mother’s grief and fight for justice

Garcia remains steadfast in her mission to raise awareness about the potential dangers of AI, despite the personal toll of losing her son. “It’s like a nightmare,” she told The New York Times. “You want to get up and scream and say, ‘I miss my child. I want my baby.’”

Garcia hopes her lawsuit will prevent other families from experiencing the heartbreak she endures daily, as she fights for justice in her son’s name.